The Build: Peeling back the layers of native LLM application deployments

Sep 11, 2024

A story birthed at the intersection of Computer Science and Software Engineering

I have been wanting to write this blog post for a very long time, so bear with me. I started my academic career as a Computer Science major back in the late 90s. That meant most of the code I wrote was written exclusively for teachers to assess whether I had mastered some algorithm or data structure. So even though I was writing code, the truth was, I was not building applications. I didn’t have to build and ship anything to production. I would submit a C or C++ program, maybe the ‘exe’ too, and that was that.

I didn’t get exposed to Software Engineering until I did an internship late in undergrad. Even then, I was doing quality assurance and didn’t understand much about maintaining an application in production, let alone shipping one.

Fast forward a decade or so, and I was doing machine learning. By then I had switched from writing code in C++ to Python, and I didn’t really understand the implications of that switch until much, much later. For most people doing machine learning, data science, AI, or any major applied computational science, the main goal is to solve some math-like problem using code, for example, developing an algorithm to prove that you can find the fastest route on a map using a new and improved version of some technique. For those of us trained to do this, our real intention in writing code isn’t necessarily to create software, but to use code to solve some tedious matrix-like problem. Once we have done that, we write a paper and share our findings with the world.

I had my first encounter with reproducibility problems when I tried to implement algorithms I had read about in papers. If I was lucky, there would be an arXiv paper and an attached GitHub repo with the code. And that is where the problem would start. The code is the instruction set that represents the solution; however, it does not contain instructions on how to build that code to recreate the conditions on the developer’s or scientist’s machine that allowed them to produce the results in the paper.

What I have just described is commonly known as the reproducibility crisis in science. This problem has existed for about as long as we have practiced science. And when it comes to mathematical sciences like computational science and machine learning, it becomes a bit more complicated.

Computer scientists, for the most part, are people trained in and concerned with the foundations of computation and information. As a science, computer science usually follows the scientific process of observation, question, hypothesis, experimentation, and so on. Now, it so happens that we can apply the principles and techniques of computer science to solve problems and create usable software. When we do, we are practicing software engineering. Even though the two are related, one being the application of the other, the motivations of their practitioners are very different. Shipping usable software is an engineering process, one chiefly concerned with creating functional, reliable, and efficient solutions.

When computer scientists like myself graduate from school and set out to become useful software engineers, we often learn on the job, and things move along. Only recently did things become muddled. Thanks to ChatGPT, our complex machine learning research projects became mainstream. Big tech companies like Google, Meta, and so on open-sourced* their large language models (LLMs, aka genAI), and builders started playing around with them. Usually, when a model like GPT-3 drops, ML folks want to play with it and see what we can build from it. The way we do that is through a process called finetuning. A model like GPT-3 is a foundational model. These types of models are generally good at doing a lot of things but may be subpar in a specific area. Because we know that a foundational model is good at learning new knowledge when given examples, we can finetune it on examples from our own domain to derive a new model that excels in that domain.

A thousand demos of these LLM-enabled projects bloomed! However, after the initial wave of excitement passed, it turned out that many of the projects builders were sharing were not in fact built from models they had finetuned themselves, but were mere API wrappers calling foundational models. There are several reasons things turned out this way. A lot of classically trained developers were coming into the genAI space for the first time and didn’t know how to actually ship these products. Many of these new developers did not know how to finetune models, let alone move them into production (prod). And this is the secret we are not sharing with the public: genAI has a deployment problem.

Deployment is the process of making software available to users. In practice, it means going from git to prod, i.e., taking the developed code, building it, testing it, and delivering it to the production environment. This is where the engineering process comes in. Programming languages like C++ are compiled languages, which sit closer to the hardware and give you more control over it. To build an application in C++, you install a C++ compiler and explicitly compile your program by running the compiler and specifying an output file, which is the artifact generated by the compilation. Compilation translates the source code into machine code, which can be executed directly by a computer’s processor, such as a CPU.

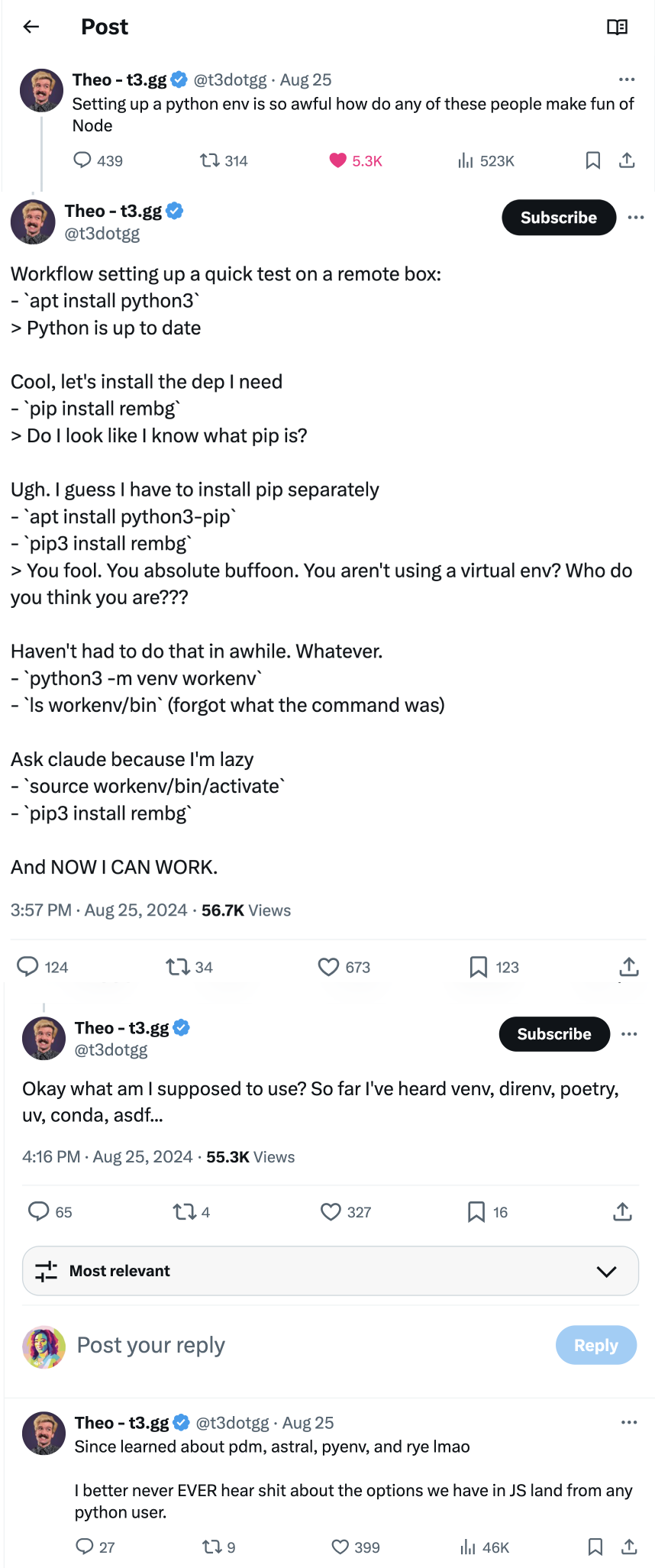

Python, by contrast, is an interpreted language, meaning there is no separate compilation step that you run yourself. As you run the program, the interpreter translates the source code (in CPython’s case, into bytecode) and executes it on the fly. To build a Python application, you install Python and write the code; as you write it, you realize you need capabilities from external libraries, so you install those too, and once all of that is done, you run the application. By default, no deployable artifact is generated as part of this process. So to deploy a Python application, a developer needs to install its dependencies, i.e., the libraries the application depends on, and then package and build the application. Managing dependencies, building, and packaging are what often make deploying the application tedious and difficult. To make matters worse, there are multiple ways to accomplish each of these steps, which is a nightmare scenario for reproducibility.
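To make the dependency problem a little more concrete, here is a minimal sketch that records which libraries, and which versions, are installed in the current Python environment, producing pinned `name==version` lines similar in spirit to `pip freeze`. It uses only the standard library (`importlib.metadata`, available since Python 3.8); the function name `snapshot_dependencies` is hypothetical. A snapshot like this is only one ingredient of reproducibility: it pins library versions but says nothing about the interpreter build, the operating system, or GPU drivers.

```python
from importlib import metadata


def snapshot_dependencies() -> list[str]:
    """Return sorted 'name==version' lines for every distribution installed
    in the current environment (hypothetical helper, similar to `pip freeze`)."""
    pins = set()  # a set de-duplicates distributions found on multiple paths
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with missing or broken metadata
            pins.add(f"{name}=={dist.version}")
    # Case-insensitive sort so the output is stable between runs.
    return sorted(pins, key=str.lower)


if __name__ == "__main__":
    for line in snapshot_dependencies():
        print(line)
```

Writing this output to a file alongside each build gives the team one record of the "code dependencies" variable for that run.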

A lot of attention is paid to code, but not enough to the final artifacts that processors run: the actual thing the end user interacts with. For compiled programs, these artifacts are application binaries. Interpreted programs, on the other hand, don’t go through compilation, so developers have to find another way to ship them to production.

Open source LLMs like Llama are very complex Python applications. Sharing the code and the weights is pretty straightforward; reproducing the application is quite difficult because there are a lot of moving parts. For example, LLMs require a specific type of hardware chip (a GPU) to train or deploy them. Outside of game developers and Bitcoin miners, most developers don’t have the skills or patience required to reproduce code that works with GPUs. Further, applications like LLMs are a different flavor of problem-solving. They are very, very close to scientific experiments, with independent variables (code, data), dependent variables (the model), and control variables (machine configuration, code dependencies, ecosystem dependencies). Faithfully replicating the experiment pipeline to reproduce the dependent variable requires tooling that often isn’t available to developers outside big tech companies with massive research labs.

One way to think of the deployment process is as a scientific experiment conducted in a series of stages. This analogy helps for two reasons. First, it means that developers can replicate applications and their environments, i.e., solve the “it works on my machine” problem. Second, they can answer the question of “what changed” between experiment runs, aka build runs. But before I outline the stages of deployment, it is important that I talk about the human side of application development.

Applications are often developed by a group of people within a larger, more complex organization. There are usually product managers, software developers, infrastructure engineers, DevOps engineers, quality assurance engineers, and a few other roles. All of these people have to cooperate to get the job done, and because of their varied expertise, it can be difficult to communicate clearly within the group. So the job of deployment is not only to go from git to prod, but for a group of people with disparate skills to go from git to prod in a way that can be repeated over and over again, with transparency and clarity. Not only are applications created by a group of people, but the applications themselves in most cases use software that was not created by the group. Most modern applications use open source software and bring in the solutions of other teams through APIs. This means the modern technology stack and ecosystem are quite complex, which makes the work of deployment that much harder.

Now, let’s go back to the stages of deployment. It usually starts with a product manager who designs a product or feature that solves a problem. They write up requirements and design documents and pass them on to the development team. The developers write code to implement the feature, then test it locally on their own machines to make sure that what they have built is indeed what the product manager designed. Once the developers are satisfied with their tests, they move on to the next stage: packaging the code and moving it to a staging environment. This part is very important because it is the forcing function that makes us reproduce our work. A piece of code that solves a specific problem is like a particular science experiment. In this case, the developer is the scientist who runs the experiment, and as part of sharing the result for peer review, they first need to reproduce it in an environment where others can examine it and give feedback. As with a scientific experiment, it is important to keep track of all the variables used.

Moving an application from a local environment to a staging environment means faithfully replicating all required items, and their state, from the local environment in the staging environment. For applications written in compiled languages like C and C++, that task reduces to copying over the application binary and keeping track of some performance metrics. For applications in interpreted languages like Python, it means replicating the local application environment like for like in staging. One way teams get around this is to use containerized environments like Docker. With containers, developers can isolate their application environment in an artifact that can be easily copied from server to server. However, this isn’t enough, because LLM applications are very complex. For one, containers do not run directly on hardware; they operate on top of a runtime environment like Docker Engine.

NOTE: This is a great place to pause and reflect for a minute. To get an LLM application in front of users, there first has to be a computer hosting that application; we call that computer a server. The server has an operating system installed that allows it to function. The LLM application is written in Python, and that Python application is built, packaged, and delivered in a container. The container cannot work directly on the server’s hardware; instead, it operates on top of a container runtime that abstracts away the operating system. However, LLM applications are rather hardware dependent, so developers need a solid, repeatable, and reproducible way to make sure they are talking to the hardware through the runtime abstraction. And oh, most developers have not had much experience with applications and tools that deal with hardware. I hope it is now clear why deploying LLM applications is tedious and downright difficult.

To ensure that an application build is reliable, every time the development team builds the application, they need to be able to compare all the artifacts generated by that build with the artifacts generated by the previous build. The goal of the development team is to maintain a stable, reliable, repeatable process in time, i.e., if I build it today and again in two years using the exact same materials, the resulting artifact will be the same. Further, the development team also needs to prove to themselves that the application is stable in space, i.e., that the size of the artifact created is the same, and that, given a deterministic use case, it will use the same amount of resources.
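One common way to compare the artifacts of two builds is to fingerprint each file with a cryptographic hash and record its size, then diff those records. The sketch below assumes, purely for illustration, that a build writes all of its artifacts into a single directory; the helper names `build_manifest` and `compare_manifests` are hypothetical. It only detects differences: getting bit-for-bit identical artifacts in the first place also requires deterministic build tooling (for example, no embedded timestamps), which this code does not provide.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Stream a file through SHA-256 in 1 MiB chunks and return its hex digest."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(artifact_dir: Path) -> dict[str, dict]:
    """Map each file under artifact_dir (as a relative POSIX path) to its
    digest ("stable in time") and size in bytes ("stable in space")."""
    manifest = {}
    for p in sorted(artifact_dir.rglob("*")):
        if p.is_file():
            rel = p.relative_to(artifact_dir).as_posix()
            manifest[rel] = {"sha256": sha256_of(p), "size": p.stat().st_size}
    return manifest


def compare_manifests(old: dict, new: dict) -> dict[str, list[str]]:
    """Report which artifacts were added, removed, or changed between two builds."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

In use, a team would store the manifest from each build run and call `compare_manifests(previous, current)`; an empty result for all three keys means the two builds produced the same artifacts.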

So to fully reproduce an application in a staging environment where teammates and stakeholders can review it, reproducibility must be achieved at the hardware architecture level in each locus of space, time, and the application environment. If the system that is used to migrate from local to staging to production meets these three stringent requirements, that system has achieved a reproducible build.

When the application is successfully migrated to the staging environment, the stakeholders examine it and give their feedback. Usually there is more work to be done, something or other to patch or update, and the process starts over again. As in a scientific experiment, every run of that process is a unique experiment, and the work of a modern build system is to capture all the information about those experiments in a way that is free from manipulation by external entities. That way, we can validate the hypothesis that is the application and, when something invariably goes wrong, quickly answer the question of what changed.
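As a rough illustration of treating each build run as an experiment, the sketch below records a few of the variables mentioned earlier (code, data, machine configuration, dependencies) in an immutable record, diffs two records to answer "what changed", and computes a SHA-256 fingerprint of a record. Everything here is an assumption for illustration: the `BuildRecord` fields, `what_changed`, and `fingerprint` are hypothetical names, and a real build system would capture far more. Note that a hash does not by itself prevent manipulation; it only lets you detect tampering if the fingerprint is stored somewhere the record's editors cannot also change.

```python
import hashlib
import json
import platform
import sys
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class BuildRecord:
    """One build run treated as an experiment (illustrative fields only)."""
    code_revision: str  # independent variable, e.g. a git commit hash
    data_version: str   # independent variable, e.g. a dataset tag
    # Control variables captured from the machine running the build.
    python_version: str = field(default_factory=lambda: sys.version.split()[0])
    machine: str = field(default_factory=platform.machine)
    os_name: str = field(default_factory=platform.system)
    dependencies: tuple[str, ...] = ()  # pinned "name==version" strings


def what_changed(old: BuildRecord, new: BuildRecord) -> dict:
    """Return {field_name: (old_value, new_value)} for every field that differs."""
    a, b = asdict(old), asdict(new)
    return {k: (a[k], b[k]) for k in a if a[k] != b[k]}


def fingerprint(record: BuildRecord) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the record."""
    canonical = json.dumps(asdict(record), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Because the record is frozen and the JSON encoding is key-sorted, two runs with identical variables produce identical fingerprints, and any difference shows up both in the fingerprint and, field by field, in `what_changed`.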

Creating the modern build system is equivalent to productizing the scientific process, and that is exactly what my team and I are doing at Fimio. If you found this post interesting and want to chat more about it, feel free to reach out to me on X @omojumiller.
